How we can secure critical infrastructure against zero-day hacks

A post by Dr Tingting Li, Research Associate at the Institute for Security Science & Technology.

As detailed in the recent Alex Gibney documentary Zero Days: Nuclear Cyber Sabotage, the Stuxnet worm caused havoc in an Iranian nuclear facility by exploiting unknown, and hence unprotected, weaknesses in the computer control system: so-called zero-day weaknesses.

At Imperial ISST we’ve shown that the risk of a Stuxnet-like cyber-attack succeeding can be reduced by strategically defending the known weaknesses. We can model the relative risks in the system without foreknowledge of potential zero-day weaknesses, and maximise security by focusing defences on the higher-impact risks.

I’m very grateful to have recently won the CIPRNet Young CRITICS award for this research, which was supported by RITICS with funding from EPSRC and CPNI.

Exploitability of an Industrial Control System

As shown in Figure 1, a typical attack on an industrial control system (ICS) involves a number of steps. Each step requires the attacker to exploit a security vulnerability to progress to the next, and each vulnerability may be either a zero-day weakness or a known weakness.

Each weakness can be assigned an ‘exploitability’ value reflecting the sophistication and effort required to attack it; weaknesses with higher exploitability are likely to pose a higher risk to the overall system.

Given an acceptable level of risk, we define the tolerance against a zero-day weakness as the minimum exploitability that weakness must have to push the system risk above the acceptable level.
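To make the definition concrete, here is a minimal sketch that finds the tolerance numerically. It assumes the system risk is a non-decreasing function of the zero-day's exploitability, so a bisection search applies. The names `tolerance` and `toy_risk` are hypothetical, and the linear risk curve is invented purely for illustration rather than taken from the model.

```python
from typing import Callable, Optional


def tolerance(
    risk_of: Callable[[float], float],
    acceptable_risk: float,
    tol: float = 1e-4,
) -> Optional[float]:
    """Smallest zero-day exploitability in [0, 1] at which risk exceeds acceptable_risk.

    Assumes risk_of is non-decreasing in exploitability. Returns None if even a
    fully exploitable zero-day (1.0) keeps the risk at or below the acceptable level.
    """
    if risk_of(1.0) <= acceptable_risk:
        return None  # the system tolerates any zero-day at this point
    if risk_of(0.0) > acceptable_risk:
        return 0.0  # already over the limit before any zero-day is added
    lo, hi = 0.0, 1.0  # invariant: risk_of(lo) <= acceptable_risk < risk_of(hi)
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if risk_of(mid) > acceptable_risk:
            hi = mid
        else:
            lo = mid
    return hi


# Hypothetical risk curve: baseline risk 0.2 from known weaknesses,
# rising linearly with the zero-day's exploitability.
def toy_risk(e: float) -> float:
    return 0.2 + 0.5 * e


print(round(tolerance(toy_risk, acceptable_risk=0.45), 3))  # → 0.5
```

A higher tolerance means an attacker needs a more exploitable (cheaper to use) zero-day before the system becomes unacceptably risky, which is why raising it is a sensible defence objective.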

Modelling attacks

We created a Bayesian Risk Network with three types of nodes. Complete attack paths are modelled by target and attack nodes, and the damage caused by successful attacks is evaluated against requirement nodes.

We modelled common types of ICS assets as four target nodes (T1-T4) in the Bayesian Network: a Human-Machine Interface, a Workstation, a Programmable Logic Controller and a Remote Terminal Unit. We also selected five common weaknesses (w1-w5) and five defence controls (c1-c5) from the ICS Top 10 Threats and Countermeasures.

Each weakness is assigned an exploitability value and attached to a single relevant attack node between a pair of targets, forming an attack edge. Each attack node therefore becomes a decision point at which the attacker chooses a known or a zero-day weakness to proceed. Defence controls reduce the exploitability of a weakness according to their relative effectiveness.
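A single attack node can be sketched as follows. The combination rule is an assumption, not taken from the post: each deployed control scales a weakness's exploitability by (1 − effectiveness), and the node succeeds if any attached weakness works (a noisy-OR, a common choice in Bayesian attack graphs; replacing it with `max()` would instead model an attacker who commits to the single best weakness). The class names, the control-to-weakness mapping and all numbers are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Weakness:
    name: str
    exploitability: float  # probability in [0, 1] that an exploit attempt succeeds


@dataclass
class AttackNode:
    """Attack edge between two targets; the attacker may use any attached weakness."""
    source: str
    target: str
    weaknesses: list[Weakness] = field(default_factory=list)

    def success_probability(self, controls: dict[str, dict[str, float]]) -> float:
        # controls maps a control name -> {weakness name: effectiveness in [0, 1]}
        p_all_fail = 1.0
        for w in self.weaknesses:
            e = w.exploitability
            for mitigated in controls.values():
                e *= 1.0 - mitigated.get(w.name, 0.0)  # a control shrinks exploitability
            p_all_fail *= 1.0 - e
        return 1.0 - p_all_fail  # noisy-OR: succeeds if any weakness works


# Hypothetical edge T1 -> T2 with one known weakness and one zero-day.
node = AttackNode("T1", "T2", [Weakness("w1", 0.6), Weakness("zero-day", 0.4)])
print(round(node.success_probability({}), 2))                   # → 0.76
print(round(node.success_probability({"c1": {"w1": 0.5}}), 2))  # → 0.58
```

Because the controls here target known weaknesses only, the zero-day's own exploitability is left untouched; a control lowers the node's risk only through the known weaknesses it mitigates.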

This lets us model zero-day exploits without knowing any details about them, and focus on analysing the risk they cause.

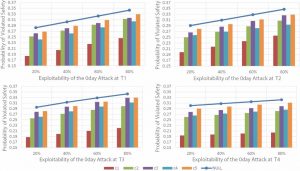

Four trials were run on the network. In each trial, a zero-day exploit is added at a target and its exploitability is scaled up (e.g. 20%, 40%, 60% and 80%), with defence controls deployed one at a time. The updated risks are then calculated, as shown in the four charts in Figure 2. The upper curve shows how the risk grows with no defence control in place, while the bars show the mitigated risk with each control deployed.
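The trial procedure can be sketched on a deliberately simplified model. This is not the Bayesian Risk Network itself: it assumes a hypothetical linear chain entry → T1 → T2 → T3 → T4, invented exploitability, impact and control-effectiveness values, a noisy-OR combination of the known weakness and the zero-day on each edge, and risk measured as normalised expected damage. It only mirrors the shape of the experiment: sweep the zero-day's exploitability at one target, then recompute the risk with no control and with each control deployed alone.

```python
# Hypothetical toy model: a linear attack chain entry -> T1 -> T2 -> T3 -> T4.
TARGETS = ["T1", "T2", "T3", "T4"]
KNOWN = {"T1": 0.5, "T2": 0.4, "T3": 0.6, "T4": 0.3}   # known-weakness exploitability on each incoming edge
IMPACT = {"T1": 1.0, "T2": 2.0, "T3": 3.0, "T4": 4.0}  # damage if the target is compromised
CONTROLS = {"c1": ("T1", 0.6), "c2": ("T2", 0.7), "c3": ("T4", 0.5)}  # control -> (edge it hardens, effectiveness)


def system_risk(zero_day_at, zero_day_e, controls=()):
    """Normalised expected damage with a zero-day of exploitability zero_day_e on one edge."""
    p_reach = 1.0  # probability the attacker holds the previous node
    damage = 0.0
    for t in TARGETS:
        known = KNOWN[t]
        for c in controls:
            edge, eff = CONTROLS[c]
            if edge == t:
                known *= 1.0 - eff  # control lowers the known weakness's exploitability
        zd = zero_day_e if t == zero_day_at else 0.0
        p_edge = 1.0 - (1.0 - known) * (1.0 - zd)  # noisy-OR of known weakness and zero-day
        p_reach *= p_edge
        damage += IMPACT[t] * p_reach
    return damage / sum(IMPACT.values())


for e in (0.2, 0.4, 0.6, 0.8):
    row = [f"none={system_risk('T2', e):.3f}"]
    row += [f"{c}={system_risk('T2', e, [c]):.3f}" for c in CONTROLS]
    print(f"zero-day at T2, e={e}: " + ", ".join(row))
```

In this toy chain, a zero-day on an earlier edge raises the compromise probability of every downstream target, so for the same exploitability a zero-day at T1 yields more risk than one at T4.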

What did we learn?

In a nutshell, that zero-day exploits at earlier steps in the attack chain create greater risk, and deploying defences at these points can significantly reduce this risk.

The zero-day exploit at asset T2 (the workstation in this example) is the most threatening, as it brings the greatest increase in risk, while the one at asset T4 is the least threatening. This is because T2 influences more subsequent nodes. Without defence controls, a zero-day exploit at T2 with only 31% exploitability is enough for the system risk to reach the critical level; applying defence control c2 raises this tolerance to 72% exploitability.

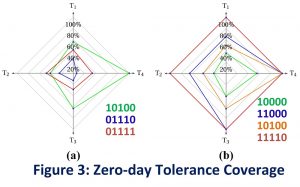

In addition to single controls, we also investigated which combinations of controls (defence plans) are most effective. Each plan is represented as a bit vector marking every control as included or excluded: plan 10011, for example, applies c1, c4 and c5 and excludes c2 and c3.
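The bit-vector encoding can be sketched as below, assuming (as the 10011 example implies) that bit i from the left corresponds to control c(i+1). The function names are hypothetical.

```python
from itertools import product

CONTROLS = ["c1", "c2", "c3", "c4", "c5"]


def decode_plan(bits: str) -> list[str]:
    """Map a plan such as '10011' to the controls it applies (bit i -> control c{i+1})."""
    if len(bits) != len(CONTROLS) or set(bits) - {"0", "1"}:
        raise ValueError(f"expected {len(CONTROLS)} bits of 0/1, got {bits!r}")
    return [c for c, b in zip(CONTROLS, bits) if b == "1"]


def all_plans() -> list[str]:
    """Every inclusion/exclusion combination, from 00000 to 11111."""
    return ["".join(p) for p in product("01", repeat=len(CONTROLS))]


print(decode_plan("10011"))  # → ['c1', 'c4', 'c5']
print(len(all_plans()))      # → 32
```

With only five controls there are 2^5 = 32 possible plans, so scoring every plan exhaustively (for example with a tolerance search at each target) stays cheap at this scale.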

For each plan we examined three measures: the maximal risk when the zero-day exploit at each target reaches its maximal exploitability, the risk reduction across different targets, and the tolerance. The tolerance value at each target can be visualised as a radar chart, as shown in Figure 3.

It’s interesting to see in Figure 3a that deploying more controls does not necessarily give larger tolerance coverage. Defending against more widespread weaknesses generally produces more risk reduction across the network. Weaknesses near the attack origin tend to have a greater impact on the risk of all subsequent nodes, so applying defences against earlier attacks is relatively more effective.

Dr Tingting Li is a Research Associate at the Institute for Security Science & Technology, Imperial College London. She obtained her PhD in Artificial Intelligence from the University of Bath in 2014. Her research is primarily in cyber security for ICS, logic-based knowledge representation and reasoning, multi-agent systems and agent-based modelling.

Email: tingting.li@imperial.ac.uk