A post by Professor Emil Lupu, Associate Director of the ISST and Director of the Academic Centre of Excellence in Cyber Security Research.

It’s often reported that we can expect 30 billion IoT devices in the world by 2020, creating webs of cyber-physical systems that combine the digital, physical and human dimensions.

In the not too distant future, an autonomous car will zip you through the ‘smart’ city, conversing with nearby vehicles and infrastructure to adapt its route and speed. As you sit in the back seat, tiny medical devices might measure your vitals and send updates to your doctor for your upcoming appointment. All of this will rely on IoT devices: internet-connected sensors and actuators dispersed throughout our physical environment, even inside our bodies.

On the minds of many, but not so often reported, is that bringing a digital interface into the system makes it reachable from anywhere on the internet, and therefore also by malicious actors. And by taking the computer out of a secure room and putting it, for example, at street level, you make it vulnerable to someone physically compromising it. Can we trust these cyber-physical systems?

Sensors can lie

So what might these malicious actors do? At Imperial College London, we’ve shown that sensors that monitor, for example, fires, volcanic eruptions and health signals can be made to lie about the data they report. This can have drastic consequences.

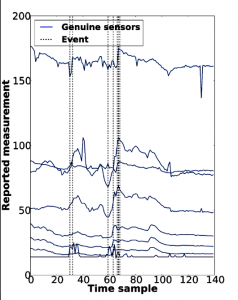

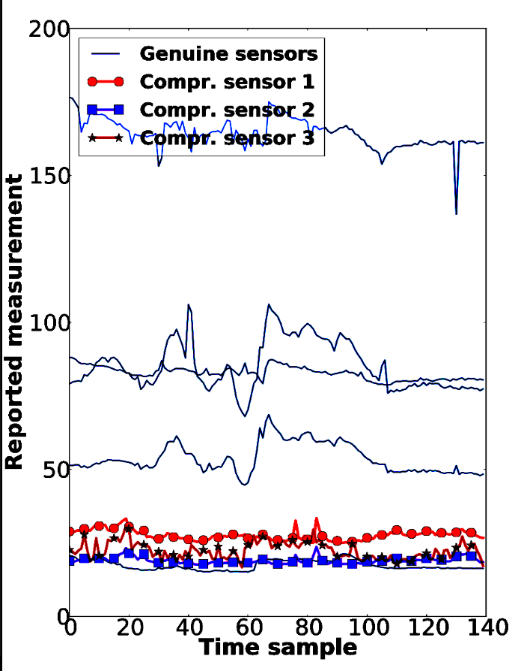

The below charts show bedside-sensor data from a healthcare setting. On the first chart, each vertical, dotted line represents an event when the health of the patient has been at risk. By compromising three sensors, as shown on the second chart, we can cancel all of these points and mask the events.

The consequences of this happening in the real world could be fatal. So we have started working on techniques to detect when sensors might be lying, by measuring the correlations between the measurements of different sensors.
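As a rough illustration of the idea of cross-checking sensors against each other, the sketch below compares each sensor with the median of the others and reports how often it disagrees. This is a simplified stand-in, not the technique developed at Imperial: it assumes all sensors observe the same quantity at the same time steps, and the function name `disagreement_rates`, the `tolerance` parameter and the readings are all hypothetical.

```python
from statistics import median


def disagreement_rates(readings, tolerance):
    """Return, per sensor, the fraction of time steps at which it deviates
    from the median of the other sensors by more than `tolerance`.

    readings: dict mapping sensor id -> list of measurements, where every
    list has the same length and index t refers to the same time step.
    (Assumption: the sensors are strongly correlated, e.g. co-located.)
    """
    sensor_ids = list(readings)
    steps = len(readings[sensor_ids[0]])
    rates = {}
    for sid in sensor_ids:
        others = [s for s in sensor_ids if s != sid]
        deviations = 0
        for t in range(steps):
            expected = median(readings[o][t] for o in others)
            if abs(readings[sid][t] - expected) > tolerance:
                deviations += 1
        rates[sid] = deviations / steps
    return rates


# Hypothetical temperature readings: s4 stays flat while its
# neighbours report a rise, as if an event were being masked.
readings = {
    "s1": [20, 21, 35, 60, 80],
    "s2": [20, 22, 36, 62, 79],
    "s3": [21, 21, 34, 59, 81],
    "s4": [20, 21, 21, 20, 21],
}
rates = disagreement_rates(readings, tolerance=5)
suspects = [sid for sid, rate in rates.items() if rate > 0.5]
```

Using the median rather than the mean keeps a single lying sensor from dragging the reference value towards itself, although this simple check breaks down once a majority of the sensors are compromised.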

Catching a lie

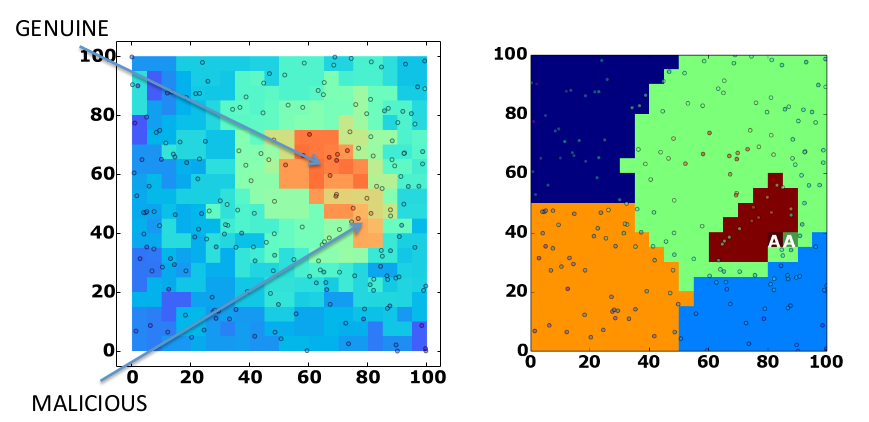

Using our techniques with fire sensors, we could detect a fake fire event even when it was located next to a genuine fire event. The below charts show the fire detection system – the chart on the right clearly highlights the fake event.

This also allows us to detect masked events – when someone is trying to hide an intrusion – and is powerful enough to distinguish these from benign false events.

Finally, we can also characterise and identify the sensors that are likely to be compromised, and calculate how many compromised measurements, or how many compromised sensors, a network can tolerate.

Corrupting artificial intelligence

But the risk doesn’t end there. To be useful to us, cyber-physical systems like driverless cars, or implanted medical devices, will use artificial intelligence techniques to learn how we behave and how the physical space around us changes.

The learning requires data from sensors and, as we’ve shown, these can be compromised. Learning from this corrupted data could lead to our driverless cars, smart infrastructure and health monitors making the wrong decisions, with dramatic consequences.

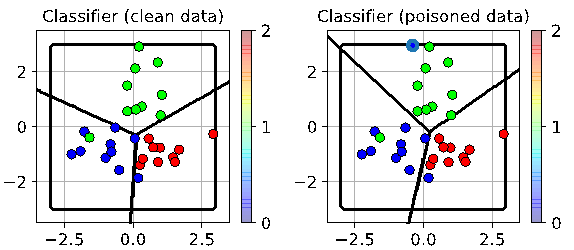

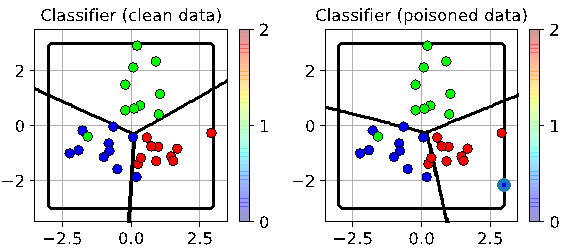

This corruption is illustrated in the below diagrams of a machine learning algorithm, which classifies data into groups. You can see how the classification boundary changes when a single additional data point is inserted. In this case the point introduced seeks to maximise the overall error.

In this below case, which would be called a targeted attack, the introduced point seeks to make the red points be recognised as blues.

What stood out in our experiments was the low number of spoofed data points required to introduce fairly substantial error rates into the algorithm.
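To make the effect of a single spoofed point concrete, here is a minimal sketch using a one-dimensional nearest-centroid classifier. The choice of classifier, the data and the poison point are all assumptions made for illustration; the post does not name the algorithm used in the experiments, and the poison point here is picked by hand rather than optimised.

```python
def train(points):
    """Fit a nearest-centroid model: one mean value per label.

    points: list of (value, label) pairs.
    """
    by_label = {}
    for value, label in points:
        by_label.setdefault(label, []).append(value)
    return {label: sum(vals) / len(vals) for label, vals in by_label.items()}


def predict(model, value):
    """Return the label whose centroid is closest to `value`."""
    return min(model, key=lambda label: abs(value - model[label]))


def error_rate(model, points):
    """Fraction of points whose predicted label differs from the true one."""
    wrong = sum(1 for value, label in points if predict(model, value) != label)
    return wrong / len(points)


# Hypothetical clean training data: two well-separated groups.
clean = [
    (1.0, "blue"), (2.0, "blue"), (3.0, "blue"),
    (7.0, "red"), (8.0, "red"), (9.0, "red"),
]
# One spoofed point, labelled "blue" but placed far inside red territory.
poison = (20.0, "blue")

clean_model = train(clean)
poisoned_model = train(clean + [poison])

clean_error = error_rate(clean_model, clean)        # evaluated on clean data
poisoned_error = error_rate(poisoned_model, clean)  # evaluated on clean data
```

The single extra point pulls the blue centroid from 2.0 to 6.5, so the genuine red point at 7.0 ends up closer to blue than to red. A real attacker would search for the point that does the most damage instead of guessing one.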

New attacks, new approaches

So far we’ve talked about the issues around compromised sensors. But there are many other issues that arise when we combine digital, physical and human dimensions in cyber-physical systems.

If this were just about physical security, or just about cybersecurity, we’d be okay. We have the tools and techniques for reasoning about physical security, about cybersecurity, and about the trust we have in people. But we don’t really have techniques to analyse security for attacks that combine these three elements. So what do we need?

Firstly, with such attacks, we need to be able to perform risk evaluation in real-time. If some parts of the system have been compromised, what is the risk to the other parts of the system? Unfortunately, techniques for aggregating risk information don’t always scale very well, but research done within my group is addressing this through Bayesian techniques.
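As a toy example of what Bayesian risk updating can look like, the sketch below revises the risk to one component when an alert is raised on another. It is not the group's method: the two-component layout, the function names and every probability are hypothetical, and a real system would involve many interdependent components.

```python
def posterior_compromised(prior, true_positive_rate, false_positive_rate):
    """Bayes' rule: P(compromised | alert) for a component.

    prior: P(compromised) before seeing the alert.
    true_positive_rate: P(alert | compromised).
    false_positive_rate: P(alert | not compromised).
    """
    evidence = true_positive_rate * prior + false_positive_rate * (1 - prior)
    return true_positive_rate * prior / evidence


def downstream_risk(p_upstream, p_if_upstream_bad, p_if_upstream_ok):
    """P(downstream compromised), marginalising over the upstream state."""
    return p_upstream * p_if_upstream_bad + (1 - p_upstream) * p_if_upstream_ok


# Hypothetical numbers: a gateway A and a controller B that depends on it.
prior_a = 0.10           # belief that A is compromised before any alert
p_b_given_a_bad = 0.60   # chance B falls if A is compromised
p_b_given_a_ok = 0.05    # chance B falls even if A is sound

risk_b_before = downstream_risk(prior_a, p_b_given_a_bad, p_b_given_a_ok)

# An intrusion detector on A fires (assumed 90% detection, 10% false alarms).
posterior_a = posterior_compromised(
    prior_a, true_positive_rate=0.9, false_positive_rate=0.1
)
risk_b_after = downstream_risk(posterior_a, p_b_given_a_bad, p_b_given_a_ok)
```

With these made-up figures, one alert on A raises the belief that A is compromised from 0.1 to 0.5, and the estimated risk to B roughly triples. Repeating such updates across every dependency in a large system is where the scaling difficulty mentioned above comes from.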

Secondly, we need to abandon the idea that we can entirely protect the system. Cyber-physical systems have much larger attack surfaces, and we should assume that the system will be compromised at some point. Instead, we need to develop techniques that enable us to continue to operate when the system, or a part of it, has been compromised.

Thirdly, we need to design security techniques that allow us to combine the digital, physical and human elements. These all represent a threat to each other, but they can also complement each other in the protection of the system. The physical element can, to some extent, physically protect the digital and human elements. The human element can teach the cyber element how to behave, in order to monitor the physical space. And the cyber element can also monitor the behaviour of the humans involved in the system.

Success relies on trust, trust needs security

Artificial intelligence and the IoT have been much heralded as disruptive technologies with benefits that permeate society. Trust in these technologies will be the ultimate driver of their societal acceptance and overall success. If the systems are not secure, then they are not trustworthy.

Cyber-physical systems are already with us. We need to address the security issues urgently, to prevent a loss of trust as we become more and more dependent on them.

Dr Emil Lupu is Professor of Computer Systems at Imperial College London. He leads the Academic Centre of Excellence in Cyber Security Research, is the Deputy Director of the PETRAS IoT Security Research Hub, and Associate Director of the Institute for Security Science and Technology.