Hello! To help you recover from all the New Year’s festivities, and to procrastinate from the Physics that I am meant to be doing for my course, I thought that today I would write a blog post that hopefully explains a Physics mystery. (Cue dramatic music…)

If you’ve read my other blogs, you will know that entropy has been bugging me for a long time, because I can’t think of a satisfactory way to explain it. Even after writing a whole essay basically on that subject, I am not quite sure that I have nailed it. However, thinking about it, there are other concepts that I would struggle to explain but am sure that I understand well enough, for example, energy. I know what energy is, and I am very comfortable using it in calculations, but any one-sentence summary of it for someone who has never heard of it before is unsatisfactory. Energy: it does things. All things. No energy, no things. Hmm. Maybe not.

The best way to explain energy that I can think of is to give some examples instead. Energy isn’t a philosophical concept, after all: it can be measured and quantified. So to explain energy, I would probably think of a pendulum. At either end of its swing it is completely stationary for an instant, because all of its movement energy has been converted into gravitational potential energy. Then, as it swings back down towards the centre, it converts this back into motion, getting faster and faster. So the exchange of energy allows the pendulum to do things: rise up in the air or get faster.
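To make the pendulum example concrete, here is a small sketch that tracks the swap between the two kinds of energy. It assumes an ideal, frictionless pendulum and takes $g = 9.81\ \text{m/s}^2$; the function name `pendulum_speed` is just made up for illustration. Setting the potential energy lost equal to the kinetic energy gained, $mg\,\Delta h = \frac{1}{2}mv^2$, the mass cancels and the speed depends only on how far the bob has dropped.

```python
import math

G = 9.81  # assumed gravitational field strength near Earth's surface, in m/s^2

def pendulum_speed(length_m: float, max_angle_deg: float, angle_deg: float) -> float:
    """Speed (m/s) of an ideal, frictionless pendulum bob released from rest at
    max_angle_deg, at the moment it passes angle_deg (both from the vertical).
    angle_deg must not exceed max_angle_deg."""
    # Height above the lowest point of the swing: h = L(1 - cos(theta))
    h_max = length_m * (1 - math.cos(math.radians(max_angle_deg)))
    h = length_m * (1 - math.cos(math.radians(angle_deg)))
    # Energy conservation: m g h_max = m g h + (1/2) m v^2, and the mass cancels
    return math.sqrt(2 * G * (h_max - h))

# A 1 m pendulum released at 30 degrees: stationary at the end of its swing,
# getting faster and faster on the way down to the bottom.
for angle in (30, 20, 10, 0):
    print(f"{angle:>2} degrees: {pendulum_speed(1.0, 30, angle):.3f} m/s")
```

At 30 degrees the speed is zero (all potential energy), and it is largest at 0 degrees (all kinetic energy), which is exactly the exchange described above.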

A similar example for entropy might be heat flowing from a hot cup of coffee into the cold surroundings. The energy starts off in a nice, useful, concentrated form, which you could just about use to power a heat engine and do some work, and degrades into a spread-out, useless form. The difference between energy and entropy, though, is that I can imagine being able to measure all the types of energy in a situation: I could measure the heat loss, the excitation of the particles around the pendulum, its speed and the height it travels to. But although thermodynamics tells us how to measure the entropy of an exchange of heat, how do you measure it in interactions where a transfer of heat isn’t involved?
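For the heat-flow case, the thermodynamic recipe is Clausius’s relation $dS = dQ/T$. Here is a rough sketch applying it to the coffee. The numbers are assumptions chosen for illustration: 0.25 kg of coffee treated as water ($c \approx 4186$ J/(kg K)), cooling from 80 °C to a 20 °C room, with the room treated as so large that its temperature doesn’t change. The coffee’s entropy goes down, $m c \ln(T_\text{room}/T_\text{hot}) < 0$, but the room gains $Q/T_\text{room}$, which is bigger, so the total still rises.

```python
import math

def coffee_entropy_change(mass_kg: float, c_j_per_kg_k: float,
                          t_hot_k: float, t_room_k: float) -> tuple[float, float, float]:
    """Return (dS_coffee, dS_room, dS_total) in J/K for coffee cooling to room
    temperature, treating the room as a reservoir at constant temperature."""
    # Heat given out by the coffee as it cools down to room temperature
    q = mass_kg * c_j_per_kg_k * (t_hot_k - t_room_k)
    # Coffee: integrate dS = m c dT / T from t_hot to t_room (negative here)
    ds_coffee = mass_kg * c_j_per_kg_k * math.log(t_room_k / t_hot_k)
    # Room: absorbs q at (essentially) fixed temperature, so dS = q / T_room
    ds_room = q / t_room_k
    return ds_coffee, ds_room, ds_coffee + ds_room

# Assumed values: 0.25 kg of "water" cooling from 353 K (80 °C) to 293 K (20 °C)
ds_coffee, ds_room, ds_total = coffee_entropy_change(0.25, 4186, 353, 293)
print(f"coffee: {ds_coffee:+.1f} J/K, room: {ds_room:+.1f} J/K, total: {ds_total:+.1f} J/K")
```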

This problem of identifying different kinds of entropy seems to have troubled other people too. If you are a fan of popular Physics books, you have probably heard of Maxwell’s Demon. This was a thought experiment that seemed to show a problem with entropy, which, as the second law of thermodynamics states, is always meant to increase (strictly, it never decreases in an isolated system).

It shows how heat can be made to flow from a cold place to a hot place (the opposite of what we usually experience, i.e. our lovely hot cup of coffee cooling down) if there is a little demon who lets only the hottest air molecules through to the coffee and leaves the coolest behind. This means that the coffee can stay hot, and the second law of thermodynamics seems to be broken. However, that is like pushing a trolley along the floor and, when it stops, shouting ‘Eureka! Energy conservation is broken!’ simply because you don’t know that the heat and noise produced by the trolley’s movement are forms of energy too. The mistake is understandable, because those messy end products are not at all like the smooth, nice movement type of energy you put in.
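Here is a toy version of the demon’s sorting, just to show how picking out fast molecules makes one side hotter than the other. It is a sketch under some big simplifications: it ignores how the demon actually measures anything, does all the sorting in one go, and works in units where $k_B T = 1$. For an ideal gas in three dimensions, a molecule’s kinetic energy follows a gamma distribution with shape $3/2$ and scale $k_B T$, so that is what the random numbers are drawn from. The median cut-off and the name `demon_sort` are my own choices for illustration. Since mean kinetic energy is proportional to temperature, comparing the means compares the temperatures.

```python
import random
import statistics

def demon_sort(n_molecules: int = 10_000, seed: int = 0) -> tuple[float, float, float]:
    """Toy Maxwell's demon. Start with gas at one temperature (k_B T = 1), then
    send every molecule with above-median kinetic energy to the coffee side.
    Returns mean kinetic energy before sorting, on the coffee side, and on the air side."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    # Kinetic energies of an ideal 3D gas: gamma distribution, shape 3/2, scale k_B T
    energies = [rng.gammavariate(1.5, 1.0) for _ in range(n_molecules)]
    threshold = statistics.median(energies)
    coffee_side = [e for e in energies if e > threshold]   # the demon lets these through
    air_side = [e for e in energies if e <= threshold]     # these stay behind
    return (statistics.mean(energies),
            statistics.mean(coffee_side),
            statistics.mean(air_side))

before, coffee, air = demon_sort()
print(f"mean KE before: {before:.2f}, coffee side: {coffee:.2f}, air side: {air:.2f}")
```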

Similarly, Maxwell’s demon doesn’t break the second law of thermodynamics if you take into account the ‘information entropy’ that the little demon contains. It turns out that the demon’s knowledge about the heat of each particle contains an entropy of its own, and his putting this knowledge to use ensures that the set-up as a whole still produces a rise in entropy. Exactly how this information entropy is measured doesn’t really matter for now. Let’s just assume we can measure it and find a calculation that puts it into the same units as the ‘heat entropy’ from before (in the same way that $mgh$ and $\frac{1}{2}mv^2$ are both measured in joules, even though they describe different kinds of energy).
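For the curious, one standard way of doing that unit conversion (not something this post relies on) uses Boltzmann’s constant: one bit of information corresponds to $k_B \ln 2$ of thermodynamic entropy. Landauer’s principle then says that erasing one bit of memory at temperature $T$ must give out at least $k_B T \ln 2$ of heat, which is one common way the demon’s bookkeeping gets balanced. The function names below are hypothetical, just for illustration.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)

def bits_to_thermo_entropy(n_bits: float) -> float:
    """Convert information entropy in bits to thermodynamic entropy in J/K:
    each bit is worth k_B ln 2."""
    return n_bits * K_B * math.log(2)

def landauer_min_heat(n_bits: float, temperature_k: float) -> float:
    """Minimum heat in joules given out when erasing n_bits at temperature_k,
    according to Landauer's principle (k_B T ln 2 per bit)."""
    return temperature_k * bits_to_thermo_entropy(n_bits)

# Erasing a single bit at roughly room temperature (300 K)
print(f"{landauer_min_heat(1, 300):.3e} J")
```

The answer is a few times $10^{-21}$ J per bit, which is why nobody notices this cost in everyday life.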

Anyway. That introduction got out of hand. The thing I wanted to write about, the ‘mystery’, is that the second law says that entropy is always increasing and that one day we will reach a state of maximum entropy, the ‘Heat Death of the universe’, after which things will be dull forever. The problem with this is that it implies that the universe came into being in a state of incredibly low entropy, and that doesn’t seem to make any sense.

For a start, that is incredibly, incredibly unlikely. Why should it be that way? The lower the entropy, the more unlikely the state, so we seem to have hit the ultimate jackpot with the entropy levels of our early universe.
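To get a feel for just how unlikely low-entropy states are, here is a toy model (my own illustration, not from the lecture): $n$ gas molecules placed independently at random in a box. The chance that they all happen to be in the left half is $(1/2)^n$. Boltzmann’s formula $S = k_B \ln W$ ties this to entropy: squeezing every molecule into half the box cuts the number of arrangements $W$ by a factor of $2^n$, so the entropy drops by $n k_B \ln 2$. The model only counts positions and ignores velocities, and the function names are hypothetical.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K

def log10_prob_all_in_left_half(n_molecules: float) -> float:
    """log10 of the probability that n independently placed molecules all sit
    in the left half of a box, i.e. log10((1/2)^n). Logs avoid float underflow."""
    return -n_molecules * math.log10(2)

def entropy_drop_all_in_left_half(n_molecules: float) -> float:
    """Entropy difference (J/K) between 'spread through the whole box' and
    'all in the left half': W falls by 2^n, so S = k_B ln W falls by n k_B ln 2."""
    return n_molecules * K_B * math.log(2)

# From a handful of molecules up to roughly a mole of gas
for n in (10, 100, 6.022e23):
    print(f"n = {n:.3g}: probability = 10^{log10_prob_all_in_left_half(n):.4g}, "
          f"entropy drop = {entropy_drop_all_in_left_half(n):.3g} J/K")
```

Even a modest entropy drop for a mole of gas (a few J/K) corresponds to a probability with about $10^{23}$ zeros after the decimal point, which is the sense in which a low-entropy start is a ‘jackpot’.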

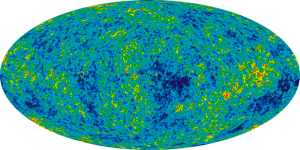

On the other hand, we know our early universe didn’t look like this! People have measured the cosmic microwave background, and the conditions of our early universe were incredibly boring. Things were spread out and smooth, and any variations were absolutely tiny (temperature differences of roughly one part in 100,000). This looks like what we think the end of our universe will be: heat all spread out, particles all spread out. But if this were a true equilibrium, that is, if the universe had started in equilibrium, it should have stayed there. There would have been no stars, no planets and no us. So what’s missing from the picture?

It turns out we need to be Maxwell’s-demon-hunters again. We are missing another type of entropy: gravitational. Though thermal entropy is high when things are spread out at an even temperature, gravitational entropy is in fact very low in that state. Gravity’s high-entropy state is when things are clumped together.

This building of gravitational structure seems to break the second law of thermodynamics, since clumped-together structures look more ordered than a smooth gas, but it does so at the expense of its environment: clouds of particles grouping themselves together also expel particles that don’t get pulled in, giving them angular momentum, as well as exporting heat. So gravity still obeys the second law, as it must, and its ability to increase entropy by clumping things together ensures that the universe is an interesting place.

You might notice that this still leaves us with our first problem. Why did the universe pop into being in this unlikely configuration?

I’ll leave that mystery for another time.

(I found out about this topic in a statistical physics lecture, but if you would like to find out more, there are plenty of fairly accessible papers on it, like ‘Life, gravity and the second law of thermodynamics’ by Charles H. Lineweaver and Chas A. Egan, and I think Roger Penrose has published some popular science books that discuss the evolution of entropy in the universe further, which I must read sometime.)

This is awesome, really interesting stuff!! I want to do Physics now 😀 also “A cup of hot mystery” – lol!

I find entropy confusing too. Would be interested in reading your essay on the topic. Am currently reading a few books on thermodynamics including one called “Entropy and its physical meaning” which is pretty good so far.