By Torben Struve, Research Postgraduate, Department of Earth Science & Engineering and Grantham Institute for Climate Change

How to start a retrospective on two amazing months at sea? Probably at the beginning! In the beginning there was… an idea! The idea was to reconstruct abrupt changes in ocean chemistry and circulation in the Equatorial Atlantic Ocean to learn about global climate and deep-water habitats. The plan was to collect sediments, seawater and deep-sea corals and to analyse their geochemical composition.

Developing this idea into our actual scientific cruise, JC094, took several years of planning and preparation, led by principal investigator and chief scientist Dr. Laura Robinson (University of Bristol) and funded by the European Research Council. The closer the day of embarkation, the busier the participants: on the one hand, everyone had to pass medical examinations and safety training courses; on the other hand, all scientific equipment had to be sorted out before leaving port, since it is too late to receive mail deliveries once at sea!

Left: The RRS James Cook at the dock in Tenerife (Photo by: Torben Struve). Right: Science party of expedition JC094. Standing row (left to right): Martin Bridger, James Cooper, Paul Morris, Lucy Woodall, Mélanie Douarin, Stephanie Bates, Michelle Taylor, Allison Jacobel, Veerle Huvenne, Leigh Marsh, Vanessa Fairbank, Kais Mohamed Falcon, Shannon Hoy, Maricel Williams, Peter Spooner, Laura Robinson, Marcus Badger. Sitting row: Jesse van der Grient, Kate Hendry, Torben Struve, Hong Chin Ng. (Photo by: Sam Crimmin)

We were lucky that our vessel, the 89.5 m-long RRS James Cook, was docked in Southampton before the cruise. This gave us the opportunity to spend a few days at the National Oceanography Centre (NOC) in Southampton preparing the science facilities on board, so that the labs and our equipment would be ready to go once we were at sea. Our floating laboratory then sailed ahead of us, and we met her again in Tenerife for embarkation on 13 October. That same afternoon we left port. Although this was our last experience of land for seven weeks, every participant of this multinational expedition (British, US, French, Dutch, Belgian, Malaysian, Spanish and German) was excited to be finally launching JC094.

Our aim was to collect a wide range of sample material in order to unravel modern and past secrets of the deep equatorial Atlantic Ocean.

The Mid-Atlantic Ridge (MAR), part of a global submarine mountain range, separates the Atlantic Ocean into two basins and allows only restricted deep-water exchange between them via the Vema Fracture Zone. Measuring modern seawater properties is crucial for achieving our scientific goals. The distribution patterns of deep-sea species in the modern ocean are poorly understood and are most likely linked to seawater chemistry as well as to seafloor topography. Reconstructions of past ocean properties (paleoceanography) are based on proxies extracted from marine archives: past seawater properties are reconstructed from chemical tracers preserved, for instance, in marine carbonates such as the shells of foraminifera (single-celled organisms) or deep-sea corals. Such proxy work relies on modern calibrations, in which the chemical tracer measured in live specimens is compared against the seawater they grew in.

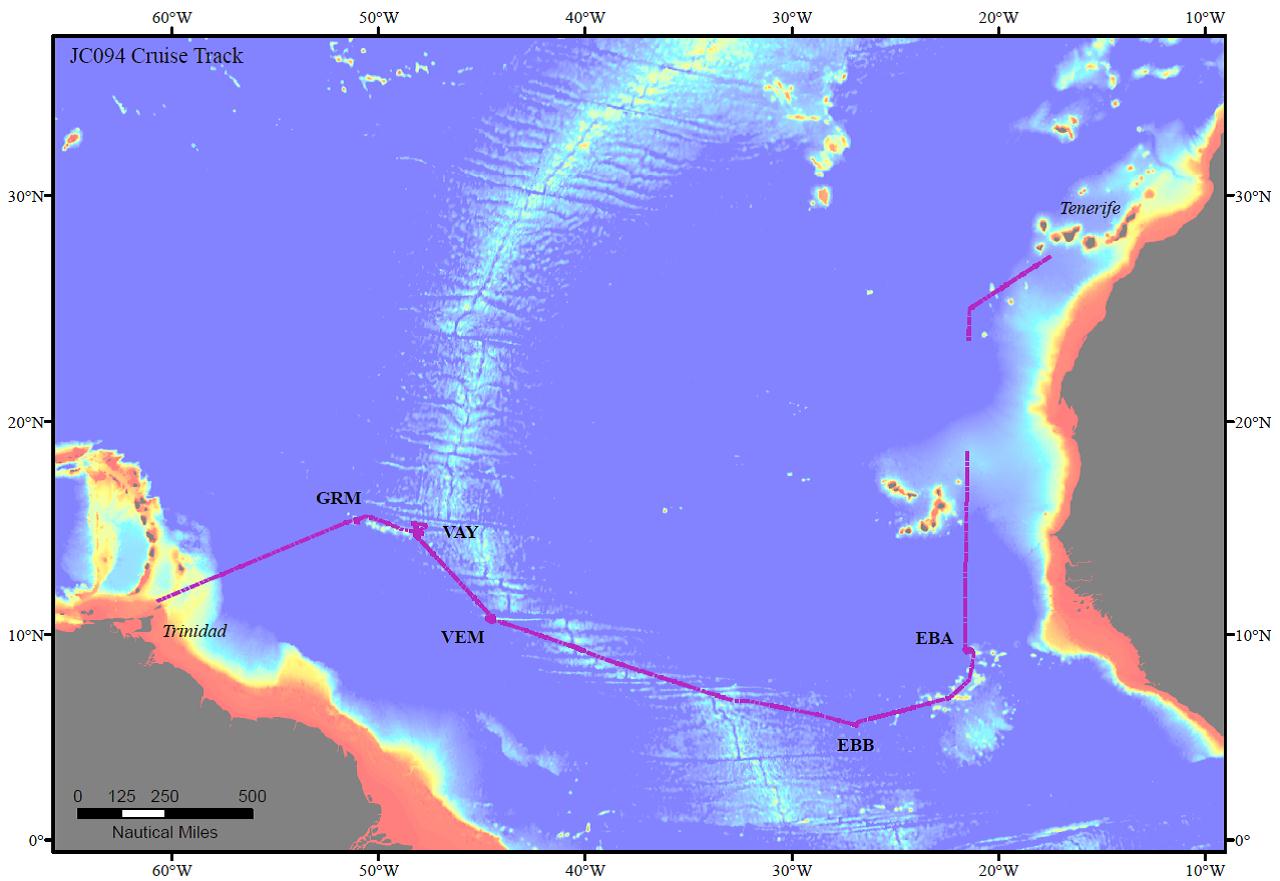

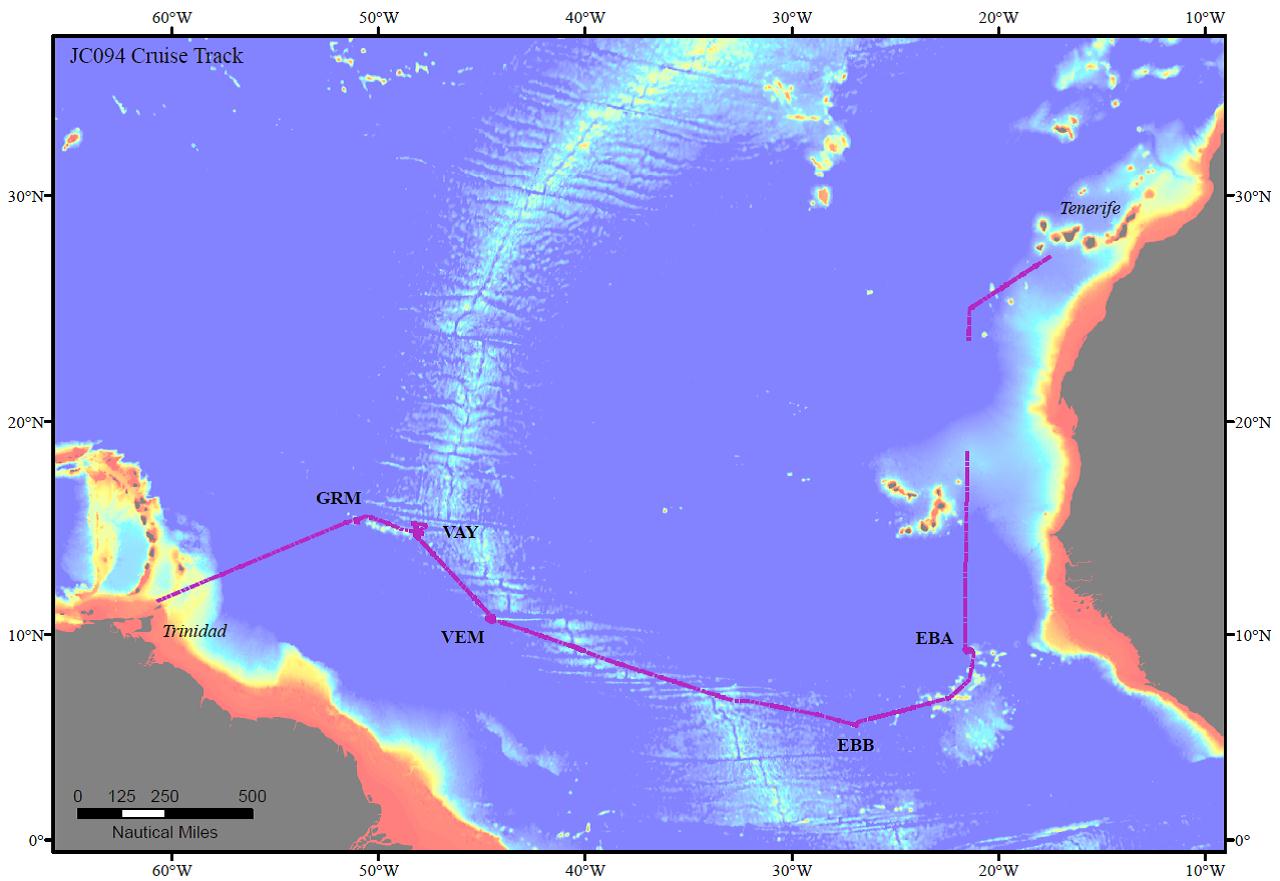

For this purpose we aimed to collect seawater, sediment and a wide range of biological samples, including the much-coveted deep-sea corals. Our five sampling locations spanned the equatorial Atlantic from east to west: Carter and Knipovich seamounts in the eastern basin, the Vema Fracture Zone at the Mid-Atlantic Ridge, and Vayda and Gramberg seamounts in the western basin.

During the expedition the science party was divided into two 12-hour shifts (4 am to 4 pm and 4 pm to 4 am), covering the full 24-hour day. Each scientist was trained in a range of methods so that everyone could help with the different sampling techniques used during JC094: seawater sampling with a CTD rosette, hydroacoustic surveying, long and short coring, and the collection and processing of coral samples with the remotely operated vehicle (ROV) ISIS.

Cruise track of JC094 from Tenerife to Trinidad. EBA: Carter seamount; EBB: Knipovich seamount; VEM: Vema Fracture Zone; VAY: Vayda seamount; GRM: Gramberg seamount. (Map created by: Shannon Hoy)

Seawater sampling with a CTD rosette:

Seawater is usually sampled with a CTD (conductivity-temperature-depth) rosette, which measures various seawater properties in real time and collects water samples at selected depths with the 24 Niskin bottles attached to its frame. At every sampling location we started our scientific program with a CTD profile. A profile through the entire water column (~4500 m water depth) took about 4 hours, making sample collection a time-consuming business. Once the rosette was back on deck, the actual work began: sampling the Niskin bottles for dissolved oxygen, carbonate chemistry, radiocarbon, nutrients and trace elements following a strict scheme. This could usually be done within one 12-hour shift, and the day shift (4 am to 4 pm) had the privilege of processing all CTD rosettes during JC094.

Meanwhile, the ship moved on to survey the sampling location hydroacoustically. Such surveys are crucial for finding good locations for sediment coring and ROV dives, since most of the deep seafloor in the area had never been mapped.

Photo 3 (seawater): Procedure of seawater sampling with a CTD rosette. (1) Sensors reporting back to main lab computer, (2) recovery of the CTD rosette, (3,4) seawater sampling from Niskin bottles and (5) sealed and labeled seawater samples for oxygen isotope analyses (Photos by: (1,2) Torben Struve, (3) Mélanie Douarin, (4,5) Vanessa Fairbank)

Deep-sea sediment sampling:

Our sediment coring focused on recovering surface material, combined with long cores reaching back to at least the Last Glacial Maximum, about 20,000 years ago. Sediment accumulates in the deep sea at rates on the order of 1 to 3 cm per 1,000 years and may be dominated by foraminiferal shells. During JC094 we used two coring techniques: long coring and short coring.
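As a rough illustration of what these rates mean for core length, assume a constant sedimentation rate r and no loss of material (both simplifications; real rates vary down-core and coring can disturb the top). Depth d is then rate multiplied by age t, and the numbers below use only the 1 to 3 cm per 1,000 years and the 12 m barrel length quoted in this post:

```latex
\begin{aligned}
d &= r\,t \\
d_{\mathrm{LGM}} &= (1\ \text{to}\ 3~\mathrm{cm\,kyr^{-1}}) \times 20~\mathrm{kyr} = 20\ \text{to}\ 60~\mathrm{cm} \\
t_{12\,\mathrm{m}} &= \frac{1200~\mathrm{cm}}{r} = 400~\mathrm{kyr}\ (r = 3~\mathrm{cm\,kyr^{-1}})\ \text{to}\ 1200~\mathrm{kyr}\ (r = 1~\mathrm{cm\,kyr^{-1}})
\end{aligned}
```

Under these assumptions the Last Glacial Maximum lies well within the top metre of sediment, while a fully penetrating 12 m barrel could in principle span several hundred thousand years; actual penetration and recovery are not guaranteed to reach the full barrel length.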

In long coring, a metal barrel (we used 12 m barrels) is driven deep into the sediment, providing long sediment records. Once on deck, long cores are cut into 1.5 m segments, split into two halves and sub-sampled for chemical and physical analyses. A drawback of the technique is that the top of the sediment column (the sediment-seawater interface) is disturbed or lost.

Photo 5 (Long coring): Long coring workflow. (1) Coring device is back at the surface, (2) metal barrel needs to be aligned along starboard before it can be craned back on deck, (3) pulling the core liner (yellow tube) holding the sediments out of the metal barrel and cutting the liner into sections, (4) splitting the core liner sections into two halves: work and archive, (5) archive half of a ~5 m long sediment core and (6) D-tube which is used for long-term storage of sediment core sections. (Photos by: (1,2,4) Torben Struve, (3,5) Mélanie Douarin, (6) Stephanie Bates)

Short coring with a megacorer recovers undisturbed short cores, including the sediment-seawater interface, so the two coring techniques complement one another. Most of the short cores were sliced, bagged and stored right away, while some were examined for anthropogenic impact, namely microplastics.

Photo 4 (megacoring): Processing samples from a megacorer. (1) Recovering the megacorer, (2) a single megacore tube on the sediment extraction table, (3) slicing sediments of a megacore tube and (4) sliced and bagged sediment samples. (Photos by: (1) Hong Chin Ng, (2, 4) Mélanie Douarin, (3) Jesse van der Grient)

ROV (Remotely Operated Vehicle) dives:

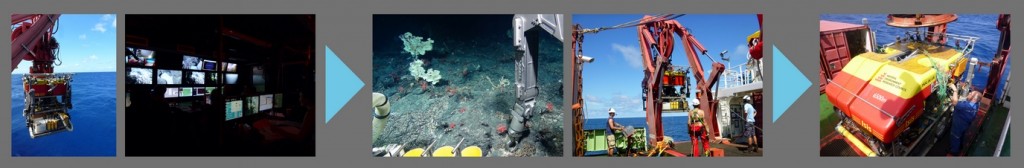

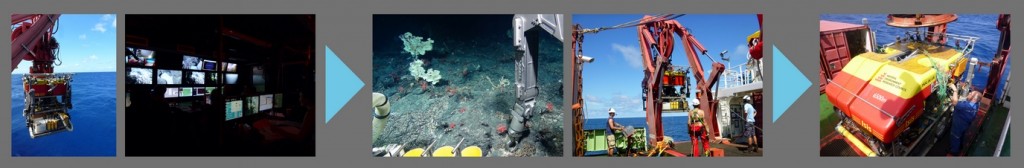

The main focus of this expedition was diving with the ROV ISIS, essentially a robot the size of a small car connected to the ship by a cable. An onboard CTD reported seawater properties, various cameras allowed real-time observation of the seafloor, two robotic arms used various tools for selective sample collection, and a hydroacoustic system allowed ultra-high-resolution seafloor mapping. At any time during a dive, at least three scientists and two pilots (rotating with replacement teams) were in the control unit, making sure that we got the most out of every single dive. Dives could be quite long, and during JC094 we set a new record for the longest ISIS dive: 43 hours and 43 minutes!

Photo 6: Operating the ROV ISIS from RRS James Cook. (1) Deploying the ROV, (2) inside the control unit on deck, housing screens for the various cameras and instruments onboard ISIS, (3) sample collection at the seafloor with one of the two mechanical arms, (4) recovery of ISIS with the port side A-frame crane and (5) ISIS is back on deck with some unexpected bycatch: fishing lines. (Photos by: (1) Mélanie Douarin, (2) Torben Struve, (3) ISIS, (4,5) Vanessa Fairbank)

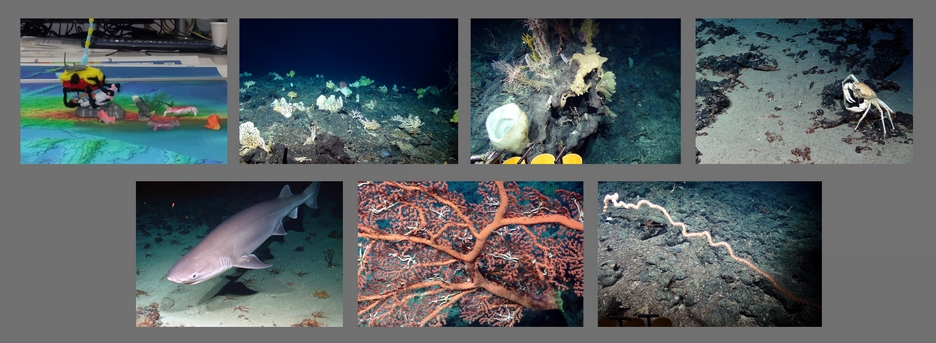

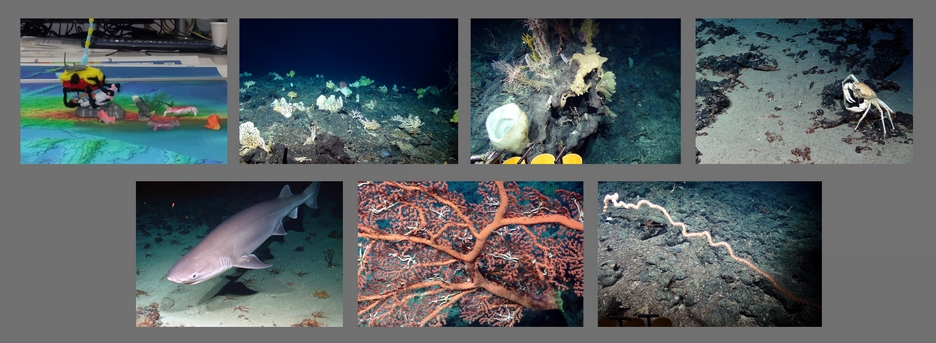

Our ROV dives focused on collecting live and fossil (i.e. dead) sample material, in particular deep-sea corals. For investigations of past ocean properties, deep-sea corals have the advantage of growing where sediment deposition is lacking or discontinuous, for instance on steep seamount slopes and in high-current environments. Our cruise track across the Atlantic was designed to target seamounts rising more than 4000 m above the surrounding seafloor, allowing us to collect samples over a wide depth range. Live coral specimens are used for calibration and method development, so that these methods may eventually be applied to fossil deep-sea corals to reveal secrets about past ocean properties.

Besides deep-sea corals, we collected live specimens of various other deep-sea species for DNA analyses, which allow conclusions to be drawn about the distribution patterns of deep-sea species.

Furthermore, we ran ultra-high-resolution seafloor and habitat mapping campaigns with the ROV to investigate potential links between bathymetry and deep-sea habitats. Combined with the seawater data, these maps may help unravel major biogeographical relationships between deep-sea biology, hydrography and bathymetry.

Photo 7: Impressions of deep-sea ROV dives during JC094. (Photos by: (1) Jesse van der Grient, others by ISIS)

Samples had to be recovered from the ROV quickly once it was back on deck: all biological samples, including live and fossil deep-sea corals, were transferred to the cold room lab for identification, separation and documentation. Sediment and seawater samples collected with the ROV were processed separately from the biological samples. The fossil corals were separated from the live samples and taken to the deck lab for drying, sorting and identification, one of the most puzzling tasks on board!

Photo 8: Processing samples collected with the ROV. (1) Sample recovery from the various trays and boxes on ISIS, (2) samples placed in buckets, sorted by water depth and location, (3) sorting, documenting and archiving of biological samples in the cold room, (4) fossil deep-sea coral samples are transferred from the cold room into the deck lab for drying and identification, (5) dried and sorted fossil coral samples waiting for (6) photographing and bagging. (Photos by: (1) Vanessa Fairbank, (2,6) Mélanie Douarin, (3,4) Torben Struve, (5) Hong Chin Ng)

So we moved across the Atlantic Ocean, collecting thousands of samples over seven weeks, and everybody was involved in processing all types of samples, which kept the work from becoming monotonous. Eventually it was time to say goodbye: after seven amazing weeks on board the RRS James Cook, expedition JC094 ended in Port of Spain, Trinidad. Everybody carried home memories of a great experience and of scientific success at sea. Now we are looking forward to receiving the samples for detailed chemical analyses.

Find out more on the TROPICS project website.

Read The TROPICS research cruise from Tenerife to Trinidad: Tracing oceanic processes using corals and sediments in full

By Dr Simon Buckle, Grantham Institute